Final Project: Wayfinding for tagAR process

by Lenny Martinez

- December 11

Link to my final project pitch: http://www.vrstorytelling.org/assingment-07-final-pitch/

This semester I chose to do an apprenticeship with Renée Stevens, who has been working on an Augmented Reality app for iOS devices. Her app is named tagAR, and you can see a quick prototype here: https://vimeo.com/229070725. The goal of the app is to replace the "Hello, my name is" tags at conferences and help people connect better, using AR technology and the phones we always carry around.

For my final project, I worked on applying what I had learned to a new feature for the app: a wayfinding system. The idea came from one of the most common questions at a workshop or conference: "Where is Person X?" tagAR currently handles this by letting you search for someone at the conference and then showing a map with walking directions to that person.

While that worked, I thought it would be neat to also have an AR-based system for guiding the user to the person they were looking for. The idea was to take the user's location as the origin and create an arrow that would show up on the map and always point in the direction of that person. The arrow would be accompanied by text displaying an ETA, which gives a sense of how long the search will take.
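The arrow and ETA both come down to two numbers: the compass bearing from the user to the destination and the distance between them. Below is a minimal sketch of that math, assuming a spherical Earth and a constant walking speed. The `Coordinate` type, the function names, and the 1.4 m/s default speed are hypothetical and not taken from the tagAR code. In the app itself the coordinates would come from CoreLocation, and `CLLocation.distance(from:)` already provides the distance. The haversine version is written out here only so the math is visible.

```swift
import Foundation

/// Hypothetical latitude/longitude pair in degrees
/// (an iOS app would more likely use CLLocationCoordinate2D).
struct Coordinate {
    let latitude: Double
    let longitude: Double
}

/// Initial great-circle bearing from `origin` to `destination`,
/// in degrees clockwise from true north, normalized to [0, 360).
func bearing(from origin: Coordinate, to destination: Coordinate) -> Double {
    let lat1 = origin.latitude * .pi / 180
    let lat2 = destination.latitude * .pi / 180
    let dLon = (destination.longitude - origin.longitude) * .pi / 180
    let y = sin(dLon) * cos(lat2)
    let x = cos(lat1) * sin(lat2) - sin(lat1) * cos(lat2) * cos(dLon)
    let degrees = atan2(y, x) * 180 / .pi          // range (-180, 180]
    return (degrees + 360).truncatingRemainder(dividingBy: 360)
}

/// Great-circle distance in meters (haversine formula, mean Earth radius).
func distance(from origin: Coordinate, to destination: Coordinate) -> Double {
    let earthRadius = 6_371_000.0
    let lat1 = origin.latitude * .pi / 180
    let lat2 = destination.latitude * .pi / 180
    let dLat = lat2 - lat1
    let dLon = (destination.longitude - origin.longitude) * .pi / 180
    let a = sin(dLat / 2) * sin(dLat / 2)
        + cos(lat1) * cos(lat2) * sin(dLon / 2) * sin(dLon / 2)
    return 2 * earthRadius * atan2(sqrt(a), sqrt(1 - a))
}

/// Rough walking ETA in whole minutes, rounded up.
/// The 1.4 m/s default is an assumed average walking speed.
func walkingETAMinutes(meters: Double, speed: Double = 1.4) -> Int {
    Int((meters / speed / 60).rounded(.up))
}
```

Rounding the ETA up keeps the label from promising an arrival that is a few seconds too early.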

In Unity, something like this could be achieved with the SmoothLookAt action in Playmaker. But I was working in Swift, not C# or JavaScript (the languages Unity supported).
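Playmaker's SmoothLookAt eases an object's rotation toward a target over several frames instead of snapping it into place. In Swift, one rough way to get the same effect is to move the arrow's heading a fraction of the way toward the target bearing on each frame. This is only a sketch of that idea and not a port of Playmaker's action. The function names and the per-frame fraction `t` are hypothetical, and headings are compass degrees clockwise from north.

```swift
import Foundation

/// Signed shortest angular difference from `current` to `target`,
/// in degrees, in the range [-180, 180).
func shortestAngleDelta(from current: Double, to target: Double) -> Double {
    var delta = (target - current).truncatingRemainder(dividingBy: 360)
    if delta >= 180 { delta -= 360 }
    if delta < -180 { delta += 360 }
    return delta
}

/// Moves `current` a fraction `t` (clamped to 0...1) of the way toward
/// `target` along the shorter arc. Returns a heading in [0, 360).
func smoothHeading(current: Double, target: Double, t: Double) -> Double {
    let step = shortestAngleDelta(from: current, to: target) * min(max(t, 0), 1)
    let next = (current + step).truncatingRemainder(dividingBy: 360)
    return next < 0 ? next + 360 : next
}
```

Calling `smoothHeading` once per frame with a small `t` (0.1, for example) eases the arrow toward a new bearing. Going through `shortestAngleDelta` stops the arrow from spinning the long way around when the bearing crosses north, for example from 350° to 10°.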

When I first sat down with Renée to discuss my plans, we agreed to make the destination something we knew wouldn't move, like a coffee shop. This way, I could focus on testing the core feature: an arrow that points to a destination and shows the ETA.

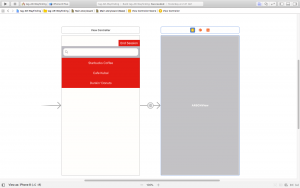

I began by building the app's views, keeping them simple for demo purposes, and then tried building the location and transformation features around the AR scene. The flow of the experience (for the demo) was:

1. Tap the coffee shop you want to travel to.

2. The AR scene loads with the arrow pointing to the desired destination.

3. Once you reach the coffee shop, tap End Session to stop the tracking and exit the experience.

(The two views: left is for step 1; right is for steps 2 and 3)
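The three demo steps map onto a small state machine. Below is a minimal sketch that assumes hypothetical `WayfindingState` and `WayfindingEvent` types and uses plain strings for destination names. The real app's view controllers and model types aren't shown here.

```swift
/// The three demo steps as states.
enum WayfindingState: Equatable {
    case choosingDestination               // step 1: list of coffee shops
    case navigating(destination: String)   // step 2: AR scene with the arrow
    case ended                             // step 3: session stopped
}

/// User actions that can move the flow forward.
enum WayfindingEvent {
    case tappedDestination(String)
    case tappedEndSession
}

/// Pure transition function: returns the next state, or the current
/// state unchanged if the event doesn't apply.
func transition(_ state: WayfindingState, on event: WayfindingEvent) -> WayfindingState {
    switch (state, event) {
    case (.choosingDestination, .tappedDestination(let name)):
        return .navigating(destination: name)
    case (.navigating, .tappedEndSession):
        return .ended
    default:
        return state
    }
}
```

Because the flow lives in one pure function, it can be checked without running the AR scene. The views would then just react to the current state.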

After a while, working like this became really difficult because I couldn't test the location-based features.

So, starting from a tutorial (https://www.youtube.com/watch?v=nhUHzst6x1U) that Renée shared with me, I rebuilt the app, beginning with the mapping parts. The AR navigation components could be built once the mapping functionality worked. From this tutorial I learned where the user's location was stored and where the desired location could be stored for later use.

Once I understood where the information I needed was stored, I got rid of the map view I had created before and began building the augmented navigation scene. For the first part of the augmented navigation, I focused on creating a simple geometric shape and transforming it so that it pointed from the user's location to the destination (the location of the person the user was looking for).
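To point a shape from the user to the destination, the geographic offset has to be turned into scene coordinates and a rotation. Below is a minimal sketch that assumes an ARKit session using the `.gravityAndHeading` world alignment. With that alignment the scene axes follow compass directions, and the sketch takes +x as east, +y as up and -z as north (worth double-checking against Apple's documentation). It also assumes the arrow model points along -z. `Coordinate`, `LocalOffset`, `localOffset` and `arrowYaw` are hypothetical names. The offset uses an equirectangular approximation, which is adequate over short walking distances.

```swift
import Foundation

/// Hypothetical latitude/longitude pair in degrees.
struct Coordinate {
    let latitude: Double
    let longitude: Double
}

/// Offset in meters in the assumed scene axes: +x east, -z north.
struct LocalOffset {
    let x: Double
    let z: Double
}

/// Equirectangular approximation of the east/north offset between two points.
func localOffset(from origin: Coordinate, to destination: Coordinate) -> LocalOffset {
    let earthRadius = 6_371_000.0
    let dLat = (destination.latitude - origin.latitude) * .pi / 180
    let dLon = (destination.longitude - origin.longitude) * .pi / 180
    let meanLat = (origin.latitude + destination.latitude) / 2 * .pi / 180
    let east = earthRadius * dLon * cos(meanLat)
    let north = earthRadius * dLat
    return LocalOffset(x: east, z: -north)
}

/// Rotation about +y (radians) that turns a model facing -z toward `offset`.
/// Rotating (0, 0, -1) by yaw gives (-sin yaw, 0, -cos yaw), so yaw = atan2(-x, -z).
/// This works out to minus the compass bearing.
func arrowYaw(for offset: LocalOffset) -> Double {
    atan2(-offset.x, -offset.z)
}
```

SceneKit nodes expose an `eulerAngles` property, so the result could be applied with something like `arrowNode.eulerAngles.y = Float(yaw)`. That last step has not been tested here.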

I first tried using Xcode's built-in visual editor, but found it frustrating and settled on adding objects programmatically. After looking at several tutorials, I found one explaining how to create objects and place them in a scene with tap gestures. I took the object-creation part without the tapping, and then found another tutorial that explained the math I had been trying to recreate for computing the rotation matrix. That tutorial had no code (and used SpriteKit rather than SceneKit), so I'm not sure how faithful my implementation is. It is based partly on code written by a third party who was trying to understand what the original author did. This has really slowed development: I can't figure out how to load the app without this part crashing, and I've had a hard time testing the rotational part of the code.
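To help check the rotation by hand, here is one standard way to write a rotation about the vertical axis only. This is not necessarily the formulation the tutorial used. Assume the arrow model points along $-z$, the scene axes are $+x$ east, $+y$ up and $-z$ north, and $\beta$ is the compass bearing to the destination, measured clockwise from north. A rotation by $\theta$ about the $y$-axis is $R_y(\theta)$. Choosing $\theta = -\beta$ turns the arrow's forward vector toward the destination:

$$
R_y(\theta) = \begin{pmatrix} \cos\theta & 0 & \sin\theta \\ 0 & 1 & 0 \\ -\sin\theta & 0 & \cos\theta \end{pmatrix},
\qquad
R_y(-\beta)\begin{pmatrix} 0 \\ 0 \\ -1 \end{pmatrix} = \begin{pmatrix} \sin\beta \\ 0 \\ -\cos\beta \end{pmatrix}
$$

As a check, $\beta = 90^\circ$ gives $(1, 0, 0)$, which is due east. $\beta = 0$ leaves the arrow pointing along $-z$, which is north.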

I've been stuck for the past couple of days trying to figure out how to test this. In the process, I fixed the starting location to a point inside the apartment where I'm staying so I can check the math by hand. So far, though, I haven't gotten the code to show where it's failing or why it produces something different from what I expect.
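One way to make location-dependent code testable is to put the location source behind a protocol, so a fixed coordinate can stand in for the real GPS fix. Below is a sketch of that approach. `LocationProvider`, `FixedLocationProvider` and `ArrowModel` are hypothetical names, and a real implementation would wrap `CLLocationManager` behind the same protocol. The bearing uses a flat-Earth approximation, which is reasonable across a neighborhood and easy to reproduce on paper.

```swift
import Foundation

/// Hypothetical latitude/longitude pair in degrees.
struct Coordinate {
    let latitude: Double
    let longitude: Double
}

/// Anything that can report the user's current position (nil = no fix yet).
protocol LocationProvider {
    var currentLocation: Coordinate? { get }
}

/// Test double that always reports the same spot.
struct FixedLocationProvider: LocationProvider {
    let currentLocation: Coordinate?
}

/// Computes the compass bearing the arrow should show.
struct ArrowModel {
    let provider: LocationProvider
    let destination: Coordinate

    /// Bearing in degrees clockwise from north, in [0, 360), or nil without a fix.
    func bearingDegrees() -> Double? {
        guard let origin = provider.currentLocation else { return nil }
        // Flat-Earth approximation: scale longitude by cos(latitude) so both
        // axes are in comparable units, then take the angle from north.
        let north = destination.latitude - origin.latitude
        let east = (destination.longitude - origin.longitude) * cos(origin.latitude * .pi / 180)
        let degrees = atan2(east, north) * 180 / .pi
        return (degrees + 360).truncatingRemainder(dividingBy: 360)
    }
}
```

With the origin pinned, a destination due east should always give 90° and one due north 0°. If the result is wrong, the problem is in the math and not in the location plumbing.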

Through all of this, I've learned to work with Swift and Xcode, including using CocoaPods to import frameworks and modules (Pods). I also learned a great deal more about coding and designing an experience than I knew before. I wrote more about that process here: http://www.vrstorytelling.org/independent-learning-learning-swift/.

As a post-mortem, I honestly wish I had been working on this from the start of the semester rather than the end. It was really exciting to build my own app experience and then test it on my phone. I spent much of the semester exploring what I wanted to do, and we honed in on this project as I learned more about Swift, AR and VR, and design. That exploration will pay off in the long run, but I'm not happy that I couldn't fully express my idea in code over the last month.

You can find my code so far on GitHub: https://github.com/lennymartinez/NEW600-FinalProject

On GitHub, you can also find a small subset of the places I visited to gather information for my project.
